Persistent Storage for Kubernetes Compared: Local Disk vs. Enterprise Storage vs. Kubernetes-Native Storage

In the age of cloud computing, Kubernetes has emerged as the go-to platform for container orchestration. As more enterprises run databases and middleware on Kubernetes, demand for Kubernetes-compatible persistent storage is rising as well.

Which storage products are suitable for Kubernetes? Can enterprises use existing storage products such as local disks and NAS? How do these storage solutions perform on Kubernetes? In this article, we examine three popular storage options - local disk, enterprise storage, and Kubernetes-native storage - and compare how well each provides storage services for containerized applications.

Local Disk

Users can directly use local disks on the server as Kubernetes storage. Because the I/O path between the disk and the application is short, local disks deliver high storage performance. RAID can additionally protect data against single-disk failures.

However, local disks have critical weaknesses in availability, scalability, and resource utilization.

- No node-level availability: If a physical node fails, the applications that depend on its disks cannot be restored on other nodes. Business systems can improve availability themselves on the data plane, but doing so makes the whole system more complex.

- Unable to meet the agility that Kubernetes workloads demand: A local disk's storage capacity is limited by its size. To scale out, users have to add disks and manually change Pod configurations, which is complicated and time-consuming. To further improve the availability of disks within a physical node, users need to deploy RAID. All of this makes it difficult to provision sufficient storage for a large number of applications within a limited time and budget.

- Heavy O&M burden and low resource utilization: Both the deployment and the fault recovery of local disks require intensive manual effort. Additionally, storage resources are hard to utilize fully because they cannot be shared across nodes.

Overall, local disks on Kubernetes are suitable only for small-scale trials in the initial stages of containerization or as data storage for lower-priority applications. They are difficult to adopt widely in large-scale production scenarios.
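To make the node-level limitation concrete, here is a minimal sketch of how a local disk is typically exposed to Kubernetes as a `local` PersistentVolume. It uses Python only to build the manifest as a dictionary and print it as JSON, which `kubectl` accepts alongside YAML. The function name, volume name, node name, disk path, and the `local-storage` class name are all illustrative assumptions, not values from any particular cluster.

```python
import json


def local_persistent_volume(name, node_name, path, capacity):
    """Build a PersistentVolume manifest backed by a disk on a single node.

    All argument values are placeholders chosen by the caller.
    """
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolume",
        "metadata": {"name": name},
        "spec": {
            "capacity": {"storage": capacity},
            "volumeMode": "Filesystem",
            "accessModes": ["ReadWriteOnce"],
            "persistentVolumeReclaimPolicy": "Retain",
            # Assumed class name; it only has to match the claims that use it.
            "storageClassName": "local-storage",
            "local": {"path": path},
            # A local volume must name the node that holds the disk, so any
            # Pod using it can only be scheduled onto that node.
            "nodeAffinity": {
                "required": {
                    "nodeSelectorTerms": [
                        {
                            "matchExpressions": [
                                {
                                    "key": "kubernetes.io/hostname",
                                    "operator": "In",
                                    "values": [node_name],
                                }
                            ]
                        }
                    ]
                }
            },
        },
    }


if __name__ == "__main__":
    pv = local_persistent_volume(
        "local-pv-1", "worker-1", "/mnt/disks/ssd1", "100Gi"
    )
    print(json.dumps(pv, indent=2))
```

The `nodeAffinity` block is the point of the example: the volume is pinned to one host, so if that host fails, the Pod has nowhere else to go. Each additional disk also needs its own manifest like this one, which is where much of the manual effort described above comes from.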

Enterprise Storage

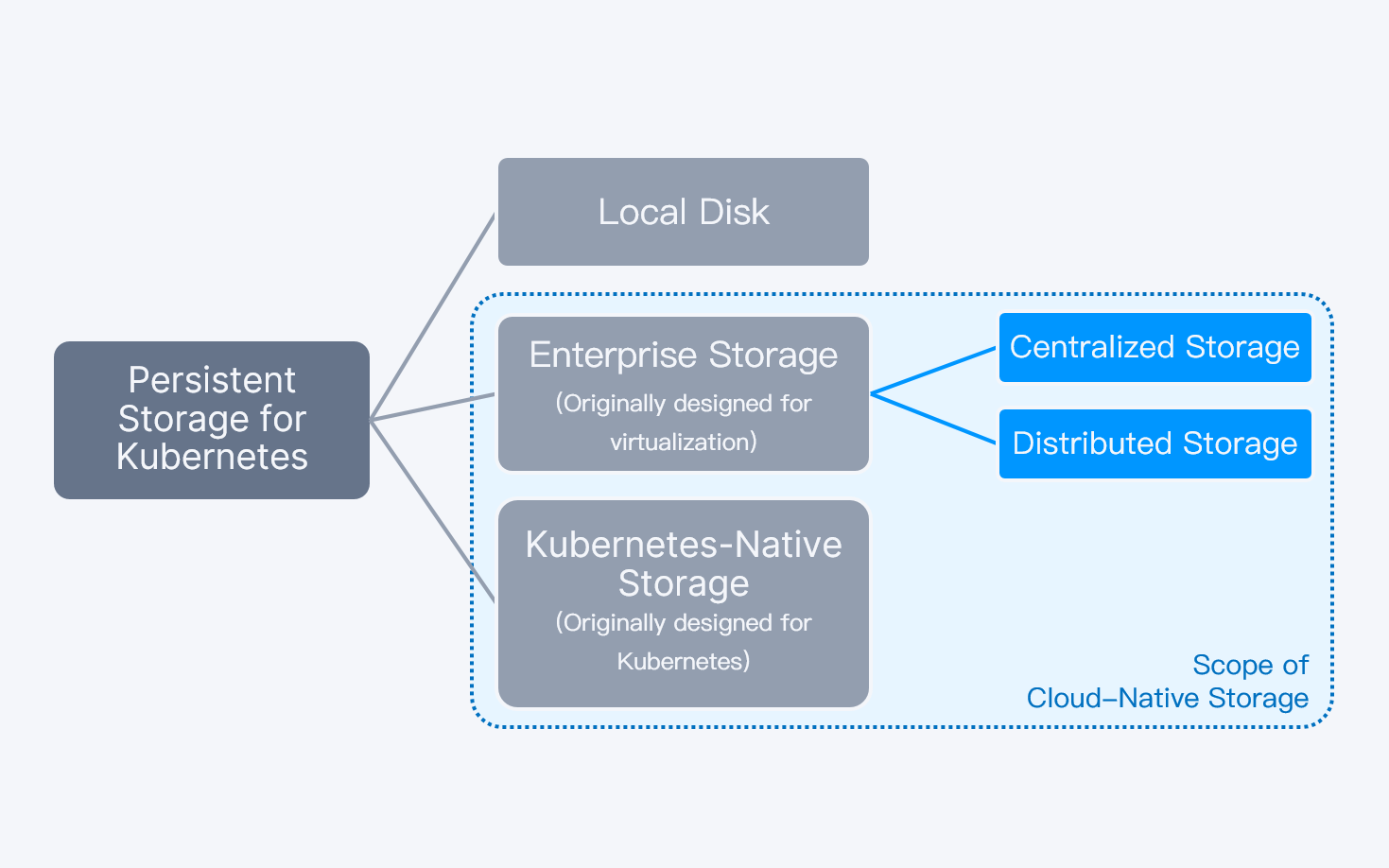

Another way to provide persistent storage for Kubernetes is to integrate Kubernetes with the underlying storage infrastructure via the Container Storage Interface (CSI). This standard allows Kubernetes to provision and configure storage dynamically, regardless of make or model. According to the GigaOm report Key Criteria for Evaluating Kubernetes Data Storage Solutions v4.0, these storage systems fall into two broad architectural categories: enterprise and Kubernetes-native.
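As a rough illustration of dynamic provisioning through CSI, the sketch below builds the two objects usually involved: a StorageClass that points at a CSI driver, and a PersistentVolumeClaim that requests a volume from that class. The driver name `csi.example.com`, the object names, and the requested size are hypothetical placeholders; a real deployment would use the provisioner name and parameters documented by its storage vendor.

```python
import json


def storage_class(name, provisioner):
    """Build a StorageClass that delegates provisioning to a CSI driver."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        # The provisioner is the name the CSI driver registers under.
        "provisioner": provisioner,
        "reclaimPolicy": "Delete",
        "allowVolumeExpansion": True,
        # Delay volume creation until a Pod using the claim is scheduled.
        "volumeBindingMode": "WaitForFirstConsumer",
    }


def volume_claim(name, class_name, size):
    """Build a PersistentVolumeClaim that requests a volume from a class."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": class_name,
            "resources": {"requests": {"storage": size}},
        },
    }


if __name__ == "__main__":
    # "csi.example.com" is a made-up driver name used only for illustration.
    sc = storage_class("fast-block", "csi.example.com")
    pvc = volume_claim("db-data", sc["metadata"]["name"], "50Gi")
    for manifest in (sc, pvc):
        print(json.dumps(manifest, indent=2))
```

Once a class like this exists, application teams only create claims. The CSI driver creates and attaches the backing volume on demand, with no manual per-volume setup on the storage side.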

Enterprise storage refers to storage solutions that were originally designed for virtualization and have expanded their capabilities to support containers through CSI plugins. These solutions include both software-defined storage (e.g., distributed storage) and traditional storage (e.g., centralized storage). Unlike Kubernetes-native storage, enterprise storage solutions are not natively designed for containers; most of them are existing systems already used for virtualization. This lets enterprises deploy storage for Kubernetes with less investment and configuration effort.

However, as GigaOm mentions in the report above, “these (enterprise storage) systems, while they do have CSI support, are of a different generation and architecture than the applications they run.” They remain limited in handling the dynamic nature of container-based workloads. It is also worth noting that the Cloud-Native Storage certified by CNCF includes both enterprise storage and Kubernetes-native storage. Because these two types of solutions differ significantly, we suggest that users pay close attention to product selection and comparison.

Centralized Storage

Centralized storage (e.g., SAN and NAS) is a widely used enterprise storage solution. Compared with local disks, it increases availability and resource utilization through shared storage and advanced features such as snapshots, cloning, and DR. However, its controller-based architecture and rack-based deployment still limit its performance and agility.

- Under high concurrency, storage controllers can become performance bottlenecks. To handle a large volume of concurrent access, users need to deploy multiple sets of centralized storage systems, which drives up storage O&M costs.

- Because it is deployed in racks, centralized storage requires considerable O&M effort to scale and to handle large numbers of Volume creation and deletion requests in a short period of time.

Distributed Storage

Distributed storage stores data across multiple independent devices on the network, which gives it excellent scalability and agility. When integrated with cloud-native applications built on distributed computing architectures, it surpasses centralized storage in performance and high availability. This is why Gartner emphasizes in its report How Do I Approach Storage Selection and Implementation for Containers and Kubernetes Deployments that storage providing cloud-native data services should be “based on a distributed architecture that can be deployed at any scale”.

However, the market is flooded with distributed storage solutions, and some products are merely repackaged open-source solutions. Their performance, stability, and integration with Kubernetes often fail to meet production-level standards. We recommend that users focus on storage solutions built on independently developed technologies and comprehensively evaluate each product's performance, availability, reliability, security, and ease of O&M.

Kubernetes-native Storage

Unlike enterprise storage, Kubernetes-native storage is specifically designed for the container environment. These solutions are closely integrated with Kubernetes and can provide container-granular data services and automatic storage provisioning. As a result, Kubernetes-native storage generally satisfies the agility and scalability needs of containerized applications better than traditional enterprise storage.
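One way to picture a container-granular data service is a snapshot taken per volume claim and restored into a new claim, all through Kubernetes objects. The sketch below builds those two manifests using the standard `snapshot.storage.k8s.io/v1` API. It assumes the cluster has the snapshot CRDs and controller installed and that the storage driver supports snapshots; the object names, class names, and size are hypothetical, and this API is not specific to any one product.

```python
import json


def volume_snapshot(name, pvc_name, snapshot_class):
    """Build a VolumeSnapshot that captures a single PersistentVolumeClaim."""
    return {
        "apiVersion": "snapshot.storage.k8s.io/v1",
        "kind": "VolumeSnapshot",
        "metadata": {"name": name},
        "spec": {
            "volumeSnapshotClassName": snapshot_class,
            "source": {"persistentVolumeClaimName": pvc_name},
        },
    }


def claim_from_snapshot(name, snapshot_name, class_name, size):
    """Build a PersistentVolumeClaim pre-populated from a VolumeSnapshot."""
    return {
        "apiVersion": "v1",
        "kind": "PersistentVolumeClaim",
        "metadata": {"name": name},
        "spec": {
            "accessModes": ["ReadWriteOnce"],
            "storageClassName": class_name,
            "resources": {"requests": {"storage": size}},
            # dataSource tells the provisioner to restore from the snapshot.
            "dataSource": {
                "apiGroup": "snapshot.storage.k8s.io",
                "kind": "VolumeSnapshot",
                "name": snapshot_name,
            },
        },
    }


if __name__ == "__main__":
    # All names below are illustrative placeholders.
    snap = volume_snapshot("db-data-snap", "db-data", "example-snapclass")
    restored = claim_from_snapshot(
        "db-data-restored", "db-data-snap", "fast-block", "50Gi"
    )
    for manifest in (snap, restored):
        print(json.dumps(manifest, indent=2))
```

Because the snapshot is scoped to one claim, it can be created, restored, and deleted alongside the application that owns the data rather than at the level of a whole array or LUN.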

Currently, users can choose either open-source Kubernetes-native storage solutions, such as Rook (based on Ceph) and Longhorn, or closed-source enterprise Kubernetes-native storage solutions, such as Portworx and IOMesh.

Although both can provide data services in a Kubernetes-native way, they differ in cost and support services. On one hand, open-source solutions eliminate procurement investment, allowing enterprises confident in their technical skills to develop applications independently with the help of the community. On the other hand, if significant failures occur, open-source solutions cannot provide professional support services as quickly as closed-source vendors.

In addition, closed-source solutions can deliver better performance and stability than open-source solutions thanks to enhanced technologies. To learn more about performance tests of mainstream Kubernetes-native storage solutions, please refer to our previous blog: Kubernetes Storage Capabilities & Performance Analysis: Longhorn, Rook, OpenEBS, Portworx, and IOMesh Compared.

Comprehensive Comparison of Three Solutions: Advantages and Disadvantages

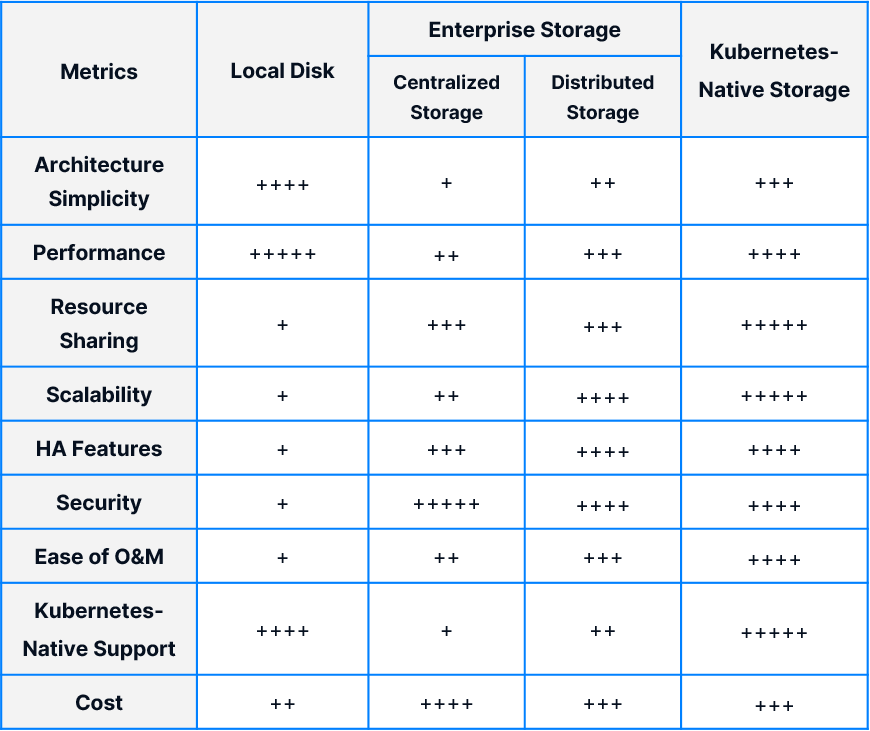

Based on the above analysis and the basic requirements that critical applications (data services) running on Kubernetes place on storage services, we comprehensively compare local disk, enterprise storage, and Kubernetes-native storage in terms of architecture, performance, resource sharing*, scalability, availability, security, ease of O&M, integration with Kubernetes**, and cost.

* Refers to the capability to flexibly schedule stateful applications across nodes and to support concurrent reads and writes from multiple Pods.

** Refers to the capability to fully leverage Kubernetes' advantages in lightweight design, automation, standardization, and agility.

Overall, Kubernetes-native storage shows more advantages in the cloud-native environment.

As a leading enterprise Kubernetes-native distributed storage product, IOMesh helps customers build elastic, reliable, and highly performant storage resource pools for stateful applications, in a Kubernetes-native way. By reducing the cost and complexity of adopting persistent storage, IOMesh assists enterprises in accelerating cloud-native transformation.

To learn more about IOMesh, please refer to IOMesh Docs, and join the IOMesh community on Slack for more updates and community support.
